
A brief Introduction to Genomics Research

After James D. Watson and Francis H. C. Crick described the structure of the DNA double helix in 1953, the basic mechanisms of DNA replication and recombination, protein synthesis, and gene expression were rapidly unravelled. Technological advances such as the invention of the polymerase chain reaction (PCR) and automated DNA sequencing methods have progressed to the point that today the entire genomic sequence of an organism can be obtained in a comparatively short time. As of this writing, the GOLD database lists more than 900 organisms, including both completely sequenced genomes and genomes for which sequencing is still in progress. For more than 800 genomes, the (partial) sequence is already available in the NCBI databases.

Genome sequence analysis

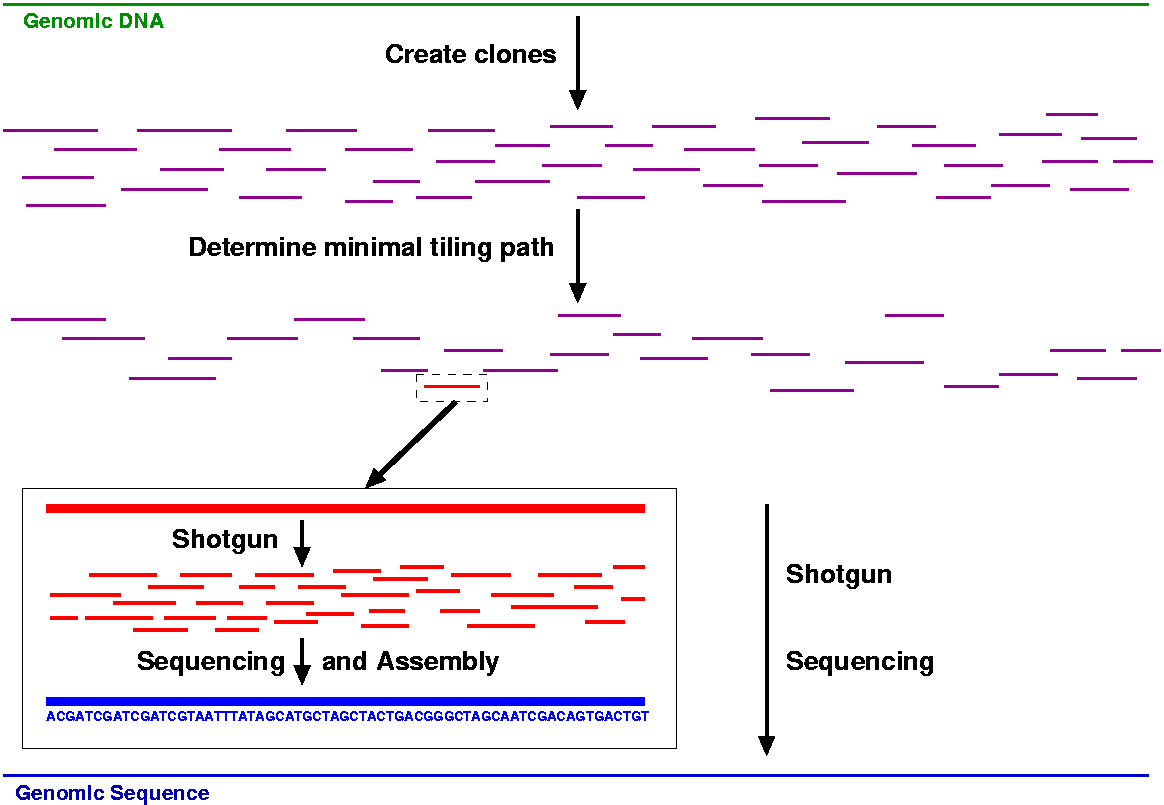

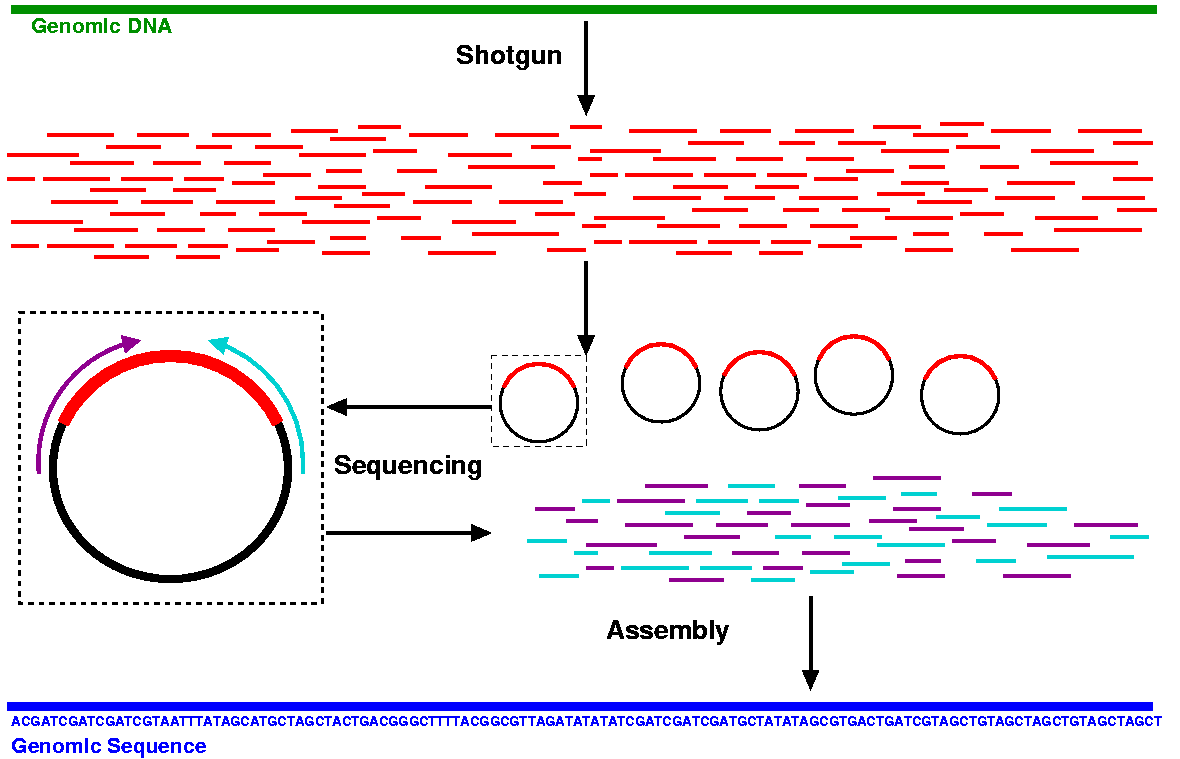

Nearly every complete genome analysis starts by determining the DNA sequence of the whole organism. Ideally, the complete and correct order of the four bases A, T, G, and C is determined before any further research is initiated. For example, a correct gene prediction based on characteristic DNA features depends on a complete and correct DNA sequence. Nowadays, whole genome sequencing is done either by a hierarchical (map based) sequencing approach (see figure 1) or by whole genome shotgun sequencing (see figure 2).

Figure 1: The hierarchical sequencing strategy first splits the genome into pieces of approximately 40 to 200 kb. These pieces are then cloned into large-insert libraries (e.g. BACs, YACs, cosmids, fosmids). From the huge number of insert clones, a minimal tiling path is created by selecting a subset of clones that covers the genome with minimal overlap between the individual clones. Since a map of clones is used, this approach is sometimes referred to as map based shotgun sequencing. Each clone in the tiling path is then sequenced individually using a shotgun approach.
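Choosing a minimal tiling path can be viewed as an interval-covering problem, assuming each clone's position on the physical map is already known as a (start, end) interval. The sketch below uses the classic greedy interval-cover algorithm, which selects the smallest possible number of clones. Real tiling-path selection also weighs overlap size and clone quality, which this toy ignores. The clone names and coordinates are hypothetical.

```python
from typing import List, Tuple

Clone = Tuple[str, int, int]  # hypothetical (name, start, end) in kb on the physical map

def minimal_tiling_path(clones: List[Clone], genome_length: int) -> List[Clone]:
    """Greedy interval cover: as few clones as possible covering [0, genome_length]."""
    ordered = sorted(clones, key=lambda c: c[1])
    path: List[Clone] = []
    covered = 0  # everything left of this coordinate is already covered
    i = 0
    while covered < genome_length:
        best = None
        # among clones starting inside the covered region, keep the one reaching furthest
        while i < len(ordered) and ordered[i][1] <= covered:
            if best is None or ordered[i][2] > best[2]:
                best = ordered[i]
            i += 1
        if best is None or best[2] <= covered:
            raise ValueError(f"gap in clone coverage at position {covered}")
        path.append(best)
        covered = best[2]
    return path

clones = [("A", 0, 120), ("B", 100, 250), ("C", 60, 200), ("D", 190, 300), ("E", 240, 400)]
print([name for name, _, _ in minimal_tiling_path(clones, 400)])
```

Picking the farthest-reaching clone at each step is what keeps the path minimal. A position that no clone covers surfaces as an error, which corresponds to a gap in the clone map.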

Figure 2: For whole genome shotgun sequencing, the genome is split into a multitude of fragments of approximately 1 to 12 kb (shotgun phase). The resulting fragments are then cloned into sequencing vectors and transformed into bacterial cells (usually E. coli). These vectors are small replicons that include a "multiple cloning site" into which the fragments can be inserted. Each fragment is thus flanked by the well-known sequence of the vector, and this sequence can be used to design a sequencing primer that binds to the vector DNA next to the insert. Two primers, one on each side, are used, yielding two sequences per "insert": a forward and a reverse sequence. The resulting DNA sequences can then be assembled: using overlaps between the individual sequences, an attempt is made to reconstruct the genomic sequence from the set of fragments.
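The read-pair idea can be illustrated with a small simulation. The sketch assumes an error-free genome string, a fixed insert length and read length, and hypothetical function names. It cuts random inserts and takes the forward read from one end. The reverse read comes from the other end on the opposite strand, so it is the reverse complement of the insert's last bases. Real libraries are not sampled uniformly, and real reads contain errors.

```python
import random
from typing import List, Tuple

COMPLEMENT = str.maketrans("ACGT", "TGCA")

def reverse_complement(seq: str) -> str:
    """Sequence of the opposite strand, read 5' to 3'."""
    return seq.translate(COMPLEMENT)[::-1]

def read_pair(genome: str, start: int, insert_len: int, read_len: int) -> Tuple[str, str]:
    """Forward and reverse read of one insert starting at `start`."""
    insert = genome[start:start + insert_len]
    forward = insert[:read_len]                      # primer on one side of the insert
    reverse = reverse_complement(insert)[:read_len]  # primer on the other side, opposite strand
    return forward, reverse

def simulate_shotgun(genome: str, n_inserts: int, insert_len: int, read_len: int,
                     seed: int = 0) -> List[Tuple[str, str]]:
    """Randomly place inserts (shotgun phase) and return their read pairs.

    Assumes len(genome) >= insert_len.
    """
    rng = random.Random(seed)
    pairs = []
    for _ in range(n_inserts):
        start = rng.randrange(0, len(genome) - insert_len + 1)
        pairs.append(read_pair(genome, start, insert_len, read_len))
    return pairs

print(read_pair("GATTACACCA", 0, 10, 4))
```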

While the hierarchical approach first splits up the genomic DNA into a set of clones which have to be ordered based on their overlapping ends along the minimal tiling path, the shotgun approach simply cuts the whole genome into a large number of small fragments which are then sequenced and re-assembled.
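Re-assembly relies on overlaps between reads. The following is a deliberately naive greedy assembler. It assumes error-free reads from a single strand and exact suffix-prefix overlaps of at least a hypothetical minimum length, and it repeatedly merges the pair of sequences with the longest overlap. Production assemblers use far more efficient data structures, handle sequencing errors and both strands, and must cope with repeats, which can easily mislead this greedy strategy.

```python
from typing import List

def overlap(a: str, b: str, min_len: int) -> int:
    """Length of the longest suffix of `a` equal to a prefix of `b` (0 if shorter than min_len)."""
    for length in range(min(len(a), len(b)), min_len - 1, -1):
        if a.endswith(b[:length]):
            return length
    return 0

def greedy_assemble(reads: List[str], min_len: int = 3) -> List[str]:
    """Join reads by repeatedly merging the pair with the longest overlap; returns contigs."""
    unique = list(dict.fromkeys(reads))  # drop duplicate reads, keep input order
    # drop reads that are contained in a longer read
    contigs = [r for r in unique if not any(r != other and r in other for other in unique)]
    while True:
        best_len, best_i, best_j = 0, -1, -1
        for i, a in enumerate(contigs):
            for j, b in enumerate(contigs):
                if i != j:
                    olen = overlap(a, b, min_len)
                    if olen > best_len:
                        best_len, best_i, best_j = olen, i, j
        if best_len == 0:
            return contigs  # no overlaps left: the remaining sequences are separate contigs
        merged = contigs[best_i] + contigs[best_j][best_len:]
        contigs = [c for k, c in enumerate(contigs) if k not in (best_i, best_j)]
        contigs.append(merged)

print(greedy_assemble(["ATTAGACCTG", "CCTGCCGGAA", "GCCGGAATAC"]))
```

If some part of the genome is not covered by any read, the loop stops with several contigs. These are the gaps that have to be closed during finishing.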

The whole genome shotgun approach in particular depends on efficient assembly algorithms and requires considerable hardware and software support. In general, automated high-throughput sequencing pipelines have minimized the manual effort of the shotgun approach and greatly decreased the cost of whole genome sequencing projects. After the sequencing and assembly phase, the obtained genomic sequence (usually a small number of contigs) has to be finished by closing the gaps between the contigs. Furthermore, the genome has to be polished to improve the quality of the consensus sequence. Ideally, the result is the complete genomic DNA sequence in a single large contig, which serves as the basis for all further research. Although completing the sequencing phase is always an important step towards understanding a genome and the basic genetic principles behind it, the DNA sequence is actually just the starting point for large-scale downstream analysis.
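The classic Lander-Waterman model gives a rough estimate of why an assembly usually ends in several contigs rather than one. It assumes reads are placed uniformly at random and that any overlap is enough to join two reads, which is a simplification. Under these assumptions, N reads of length L on a genome of size G give a coverage of c = NL/G. The expected fraction of the genome not covered by any read is then e^(-c), and the expected number of contigs is roughly N e^(-c). The genome size and read numbers below are illustrative only.

```python
import math

def lander_waterman(genome_size: int, n_reads: int, read_len: int) -> dict:
    """Expected coverage statistics, assuming uniformly placed reads and no minimum overlap."""
    coverage = n_reads * read_len / genome_size
    return {
        "coverage": coverage,
        "fraction_uncovered": math.exp(-coverage),
        "expected_contigs": n_reads * math.exp(-coverage),
        "expected_uncovered_bp": genome_size * math.exp(-coverage),
    }

# illustrative bacterial-sized example: 4 Mb genome, 800 bp reads
for n in (20_000, 40_000, 50_000):
    stats = lander_waterman(4_000_000, n, 800)
    print(f"{stats['coverage']:.0f}x coverage: ~{stats['expected_contigs']:.0f} contigs")
```

The number of expected contigs drops exponentially with coverage. Even at high coverage, a few gaps typically remain and must be closed in the finishing phase.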

Finding genes - region prediction

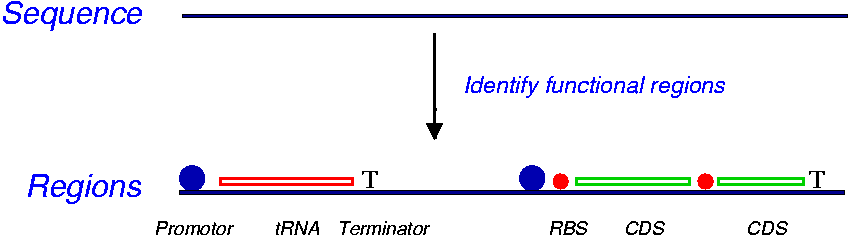

The first step towards a detailed analysis of the DNA sequence of any genome is the identification of potentially functional regions. These include protein coding sequences (CDS) and other functional non-coding elements like transfer RNA genes (tRNAs), ribosomal RNA genes (rRNAs), ribosome binding sites (RBS), etc. The prediction of such regions can therefore be considered the most important initial task, and it has led to the development of various approaches to gene prediction.

Because they encode proteins, the protein coding sequences in a bacterial genome typically exhibit characteristic sequence properties that distinguish them from non-coding Open Reading Frames (ORFs) in the sequence. Another useful property for gene identification is sequence homology between a potential coding region and genes of other organisms. Ab initio or intrinsic gene-finders rely exclusively on a statistical analysis of sequence properties (e.g. using Hidden Markov Models) to distinguish real protein coding sequences from non-coding ORFs. Examples of ab initio gene-finders for prokaryotic sequence data are Glimmer (Gene Locator and Interpolated Markov ModelER) and ZCURVE. Programs like Critica (Coding Region Identification Tool Invoking Comparative Analysis) and Orpheus additionally use homology-based information for gene prediction and are therefore called extrinsic gene-finders.
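A small scanner makes the notion of an ORF concrete. The sketch assumes the standard genetic code (ATG start codon; TAA, TAG, TGA stop codons) and looks at the three forward reading frames only. The reverse strand could be scanned the same way on its reverse complement. It reports ORFs above a hypothetical minimum length. It deliberately does no statistical scoring, because deciding which of these ORFs are real CDSs is exactly the job of gene-finders such as Glimmer or ZCURVE.

```python
from typing import List, Tuple

STOP_CODONS = {"TAA", "TAG", "TGA"}

def find_orfs(seq: str, min_codons: int = 100) -> List[Tuple[int, int, int]]:
    """Return (frame, start, end) of ATG...stop ORFs on the forward strand.

    Coordinates are 0-based and `end` is exclusive (the stop codon is included).
    Only the first ATG after the previous stop is used, giving the longest ORF per stop.
    `min_codons` counts the stop codon as well.
    """
    seq = seq.upper()
    orfs = []
    for frame in range(3):
        start = None
        for pos in range(frame, len(seq) - 2, 3):
            codon = seq[pos:pos + 3]
            if start is None and codon == "ATG":
                start = pos
            elif start is not None and codon in STOP_CODONS:
                if (pos + 3 - start) // 3 >= min_codons:
                    orfs.append((frame, start, pos + 3))
                start = None
    return orfs

print(find_orfs("CCATGAAATTTTAGGG", min_codons=2))
```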

Figure 3: Prediction of functional regions. Protein coding sequences (CDS) as well as other functional elements (tRNA and rRNA genes, promoters, terminators, etc.) can be identified by analyzing characteristic sequence properties.

For the prediction of other regions of interest, such as tRNAs, rRNAs, or signal peptides, a number of tools of varying quality exist (e.g. tRNAscan-SE, SignalP, helix-turn-helix motif prediction, TMHMM). Some of these predictions are also closely tied to functional assignments for the identified regions. As a result, it is not always possible to clearly distinguish the prediction of a region from the prediction of its function.
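TMHMM uses a Hidden Markov Model. The older Kyte-Doolittle hydropathy plot shows the underlying idea of spotting transmembrane segments more simply. The sketch below averages hydropathy values over a sliding window of 19 residues. It flags windows whose mean exceeds 1.6, the values Kyte and Doolittle suggested for membrane-spanning segments. It is a simplified stand-in for illustration, not how TMHMM works, and it assumes the protein contains only the 20 standard one-letter amino acid codes.

```python
from typing import List

# Kyte-Doolittle hydropathy index per amino acid
KYTE_DOOLITTLE = {
    "A": 1.8, "R": -4.5, "N": -3.5, "D": -3.5, "C": 2.5, "Q": -3.5, "E": -3.5,
    "G": -0.4, "H": -3.2, "I": 4.5, "L": 3.8, "K": -3.9, "M": 1.9, "F": 2.8,
    "P": -1.6, "S": -0.8, "T": -0.7, "W": -0.9, "Y": -1.3, "V": 4.2,
}

def hydropathy_profile(protein: str, window: int = 19) -> List[float]:
    """Mean hydropathy of each window; entry i covers residues i .. i+window-1."""
    values = [KYTE_DOOLITTLE[aa] for aa in protein.upper()]
    return [sum(values[i:i + window]) / window
            for i in range(len(values) - window + 1)]

def candidate_tm_windows(protein: str, window: int = 19, threshold: float = 1.6) -> List[int]:
    """0-based start positions of windows hydrophobic enough to span a membrane."""
    return [i for i, h in enumerate(hydropathy_profile(protein, window)) if h > threshold]

protein = "KKKKK" + "L" * 19 + "KKKKK"  # hypothetical test protein with one hydrophobic stretch
print(candidate_tm_windows(protein))
```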

An objective evaluation of the predictive accuracy of different gene-finders is difficult, since no bacterial genome has yet been annotated with experimentally verified data for all of its genes (even for E. coli, only a few hundred genes have been verified experimentally so far). The current state of the art is therefore the comparison with available genome annotation data, which more or less reflects the manual annotation work of human experts. The reliability of such annotations varies, however, and depends heavily on the methods used and the manual effort involved in the annotation process. Furthermore, the amount of experimental knowledge differs considerably between organisms, which also affects the reliability of a given annotation. Nevertheless, the success of a gene prediction strategy can be evaluated to some degree by comparing the predicted genes to the genes found in an existing annotation and by calculating the selectivity and sensitivity from the resulting gene counts.
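The comparison against an existing annotation can be sketched as follows. The sketch assumes genes are given as (strand, start, stop) tuples, where start and stop are the positions of the start and stop codons. It follows a common but here assumed convention for prokaryotic gene-finders: a prediction counts as correct when it shares strand and stop codon position with an annotated gene, because start codons are notoriously hard to predict. Sensitivity is the fraction of annotated genes that were found, TP/(TP+FN). Selectivity is the fraction of predictions that match the annotation, TP/(TP+FP), also called precision or, in much of the gene-prediction literature, specificity.

```python
from typing import Iterable, Tuple

Gene = Tuple[str, int, int]  # (strand, start codon position, stop codon position)

def evaluate(predicted: Iterable[Gene], annotated: Iterable[Gene]) -> Tuple[float, float]:
    """Return (sensitivity, selectivity), matching genes by strand and stop position."""
    def key(gene: Gene) -> Tuple[str, int]:
        strand, _start, stop = gene
        return strand, stop

    pred_keys = {key(g) for g in predicted}
    ref_keys = {key(g) for g in annotated}
    true_pos = len(pred_keys & ref_keys)
    sensitivity = true_pos / len(ref_keys) if ref_keys else 0.0   # TP / (TP + FN)
    selectivity = true_pos / len(pred_keys) if pred_keys else 0.0  # TP / (TP + FP)
    return sensitivity, selectivity

annotated = [("+", 100, 400), ("+", 500, 900), ("-", 1500, 1000), ("+", 2000, 2600)]
predicted = [("+", 130, 400), ("-", 1500, 1000), ("+", 3000, 3300)]
print(evaluate(predicted, annotated))
```

Both values are only as meaningful as the reference annotation itself, which is the limitation described above.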

Prediction of functions

After identifying the regions of interest in the genomic sequence, researchers are confronted with the challenging task of assigning potential functions and biological meaning to otherwise inconspicuous stretches of the genomic sequence. Since the cost and manual effort of detailed wet lab experiments on each of these regions would clearly exceed the resources of any genome project, bioinformatics tools have been developed that allow an automated prediction of potential gene functions.

Many of these tools use different strategies to compare unknown sequences to DNA or protein sequences that researchers have already determined over the past 20 years. Almost all of these known sequences have been deposited in a number of so-called sequence databases (from a computer scientist's point of view these are merely data collections). The most current list of these sequence repositories can be found either in the first issue of NAR (Nucleic Acids Research) each year or on the web via one of the various sequence retrieval servers (e.g. an SRS server).

While we can easily query these sequence databases for a gene with a specific name, the naming of genes is by no means consistent, and each gene may have several names. This chaotic state of the sequence databases is one reason for searching them by sequence similarity instead.

The most important reason for performing similarity searches is the determination of putative functions for newly sequenced stretches of DNA. By comparing the new sequences to the databases of "well known" sequences and their "annotations", we can derive a putative gene function.

If we find a database "match" for a new sequence, we can assume that the function of our new sequence may in fact be related to that of our match. This is based on a dictum by Carl Woese, who stated that:

- Two proteins of identical function will have a similar protein structure, because protein structure determines the protein function.

- Two proteins of similar structure will have similar amino acid sequences.

- Two similar amino acid sequences will have some degree of DNA sequence similarity.

- Thus, from a similar DNA or amino acid sequence, a similar protein function might be inferred.

Although this holds for many proteins, it should be clearly stated that even small changes in the DNA sequence can render the gene product useless or completely change its function. Moreover, similarity in function must not be confused with homology. The term homology indicates a genetic relationship, i.e. descent from a common ancestor, which is inferred from correspondences in structure (here in the DNA or amino acid sequence itself).

Unfortunately, a "match" in a DNA or protein database needs to be interpreted. Because the databases are very large, the uninitiated may mistake a chance hit for a meaningful "match".
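The expectation value (E-value) reported by BLAST shows why database size matters. In the Karlin-Altschul framework, a normalized bit score S' gives E = m * n * 2^(-S'). Here m is the query length and n the total length of the database, and E is the number of hits at least that good expected by chance alone. The sketch below uses illustrative lengths and ignores the edge-length corrections BLAST applies.

```python
def expected_chance_hits(bit_score: float, query_len: int, db_len: int) -> float:
    """E-value for a given bit score: E = m * n * 2**(-S'), without edge corrections."""
    return query_len * db_len * 2.0 ** (-bit_score)

# the same alignment score against a small and a 1000x larger database
small = expected_chance_hits(40.0, query_len=300, db_len=10_000_000)
large = expected_chance_hits(40.0, query_len=300, db_len=10_000_000_000)
print(f"small database: E = {small:.2g}")
print(f"large database: E = {large:.2g}")
```

Against the small database, a bit score of 40 gives an E-value of about 0.003, so a chance hit is unlikely. Against the 1000 times larger database, the same score is expected to occur by chance more than twice (E of about 2.7).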

Prominent and commonly applied tools like BLAST or FASTA compare a DNA or amino acid query sequence with huge databases of previously known sequences by computing alignments. Their results are meant to reflect the degree of similarity between two genes in different organisms. They follow the thesis that the same (or a similar) gene function should be based on an (almost) identical underlying sequence. Although these comparisons often reveal the homology between evolutionarily related organisms, the results have to be interpreted carefully, since they can only be as reliable as the matching database entry itself.
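BLAST and FASTA use heuristics to make database searches fast. The exact local alignment method that they approximate, Smith-Waterman dynamic programming, fits in a few lines. The sketch below uses a simple, hypothetical scoring scheme (match +2, mismatch -1, linear gap penalty -2) instead of a substitution matrix such as BLOSUM62 with affine gap costs, and it returns only the best score, not the alignment itself.

```python
def smith_waterman(a: str, b: str, match: int = 2, mismatch: int = -1, gap: int = -2) -> int:
    """Best local alignment score of `a` and `b` (dynamic programming, linear gap penalty)."""
    cols = len(b) + 1
    prev = [0] * cols  # previous row of the DP matrix
    best = 0
    for i in range(1, len(a) + 1):
        curr = [0] * cols
        for j in range(1, cols):
            diag = prev[j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            up = prev[j] + gap        # gap in b
            left = curr[j - 1] + gap  # gap in a
            curr[j] = max(0, diag, up, left)  # 0 lets a new local alignment start here
            best = max(best, curr[j])
        prev = curr
    return best

print(smith_waterman("TTACGTTT", "GGACGTGG"))
```

Keeping only two rows of the matrix reduces memory to O(len(b)). The running time stays O(len(a) * len(b)), which is why heuristics are needed for searches against whole databases.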

Other tools like Pfam, Blocks, iPSORT, and PROSITE are based on (manually) curated motif or domain databases that allow proteins to be classified using Hidden Markov Models and other techniques. Recently developed tools like InterPro combine the results of several of these applications in an attempt to classify parts of the genomic sequence more reliably and more precisely.
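PROSITE patterns are essentially regular expressions written in their own notation. The sketch below translates a common subset of that notation into a Python regular expression and scans a protein with it. The subset covers x for any residue, [..] for allowed residues, {..} for forbidden residues, (n) or (n,m) for repeats, and < and > for anchors. It does not cover every corner of the syntax, and the function names are hypothetical. The example uses the well-known N-glycosylation pattern N-{P}-[ST]-{P}. Pfam and the other HMM-based resources work quite differently.

```python
import re
from typing import List, Tuple

def prosite_to_regex(pattern: str) -> str:
    """Translate a PROSITE pattern (common subset of the syntax) into a Python regex."""
    parts = []
    for element in pattern.rstrip(".").split("-"):
        prefix = "^" if element.startswith("<") else ""  # anchored at the N-terminus
        suffix = "$" if element.endswith(">") else ""    # anchored at the C-terminus
        element = element.strip("<>")
        # split off an optional repeat count such as x(2) or x(2,4)
        m = re.fullmatch(r"(.+?)(?:\((\d+)(?:,(\d+))?\))?", element)
        core, low, high = m.group(1), m.group(2), m.group(3)
        if core == "x":
            regex = "."                      # any amino acid
        elif core.startswith("{"):
            regex = "[^" + core[1:-1] + "]"  # any amino acid except those listed
        else:
            regex = core                     # single residue or [..] alternatives
        if low is not None:
            regex += "{" + low + (("," + high) if high else "") + "}"
        parts.append(prefix + regex + suffix)
    return "".join(parts)

def scan(protein: str, pattern: str) -> List[Tuple[int, str]]:
    """0-based start and matched text of every non-overlapping hit of the pattern."""
    regex = re.compile(prosite_to_regex(pattern))
    return [(m.start(), m.group()) for m in regex.finditer(protein)]

print(prosite_to_regex("N-{P}-[ST]-{P}."))
print(scan("MKNGSAAANPTQ", "N-{P}-[ST]-{P}."))
```

In the example sequence only NGSA matches. The second asparagine is followed by a proline, which the pattern forbids.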

Genome annotation

Annotation is generally thought to be of the highest quality when performed by a human expert. The large amounts of data that have to be evaluated in any whole-genome annotation project, however, have led to the (partial) automation of the procedure. Software support for computing, storing, retrieving, and analyzing the relevant data has therefore become essential for the success of any genome project. Genome annotation can be done automatically (e.g. by using the "best BLAST hit") or manually. Manual annotation is expected to be of higher quality but takes much more time. To be certain of the "real biological function", however, each gene annotation would ultimately have to be confirmed by wet lab experiments.
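The automatic "best BLAST hit" strategy can be sketched in a few lines. The sketch assumes BLAST results in tabular format (`-outfmt 6`, whose default twelve columns end with evalue and bitscore). It also assumes a hypothetical dictionary `descriptions` that maps subject IDs to the annotation of those database entries. Hits above an illustrative E-value cutoff are ignored. The sketch transfers exactly the kind of unverified annotation that the text above warns about.

```python
from typing import Dict, Iterable, Tuple

def best_hit_annotation(blast_tab: Iterable[str], descriptions: Dict[str, str],
                        max_evalue: float = 1e-10) -> Dict[str, str]:
    """Annotate each query with the description of its highest-scoring subject."""
    best: Dict[str, Tuple[float, str]] = {}  # query -> (bitscore, subject)
    for line in blast_tab:
        if not line.strip() or line.startswith("#"):
            continue  # skip empty lines and comment lines
        fields = line.rstrip("\n").split("\t")
        query, subject = fields[0], fields[1]
        evalue, bitscore = float(fields[10]), float(fields[11])
        if evalue > max_evalue:
            continue  # not significant enough to transfer a function
        if query not in best or bitscore > best[query][0]:
            best[query] = (bitscore, subject)
    # subjects without a known description fall back to a placeholder
    return {q: descriptions.get(s, "unknown function") for q, (_, s) in best.items()}

hits = [  # hypothetical BLAST tabular lines
    "gene1\tsubjA\t45.0\t300\t100\t5\t1\t300\t1\t300\t1e-50\t250.0",
    "gene1\tsubjB\t30.0\t300\t150\t9\t1\t300\t1\t300\t1e-20\t90.0",
    "gene2\tsubjC\t25.0\t80\t60\t2\t1\t80\t1\t80\t0.5\t30.0",
]
descriptions = {"subjA": "homoserine dehydrogenase", "subjB": "aspartokinase"}
print(best_hit_annotation(hits, descriptions))
```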

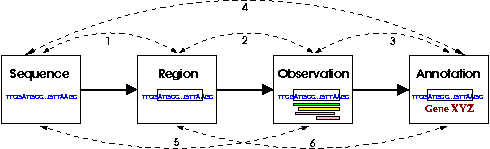

Figure 4: Traditional flowchart of a genome annotation pipeline. The process of genome annotation can be defined as assigning meaning to sequence data that would otherwise be almost devoid of information. By identifying regions of interest and defining putative functions for them, the genome can be understood and further research can be initiated. Since genome annotation is a dynamic process, the arrows indicate the mutual influences between the individual steps. For example, the region prediction (1), the computation of observations (5), and the annotation (4) depend on the quality of the sequence (e.g. because of frameshifts). Conversely, "surprising" observations (2) or inconsistencies discovered during the annotation (6) may require updates of the region prediction. Changing a region in turn produces new observations, which have to be considered carefully for a revised annotation (3).

Figure 4 shows the flowchart of a commonly used genome annotation pipeline, including the interactions and dependencies between the individual steps. For example, a correct gene prediction depends heavily on the quality of the genomic sequence. Conversely, questionable region predictions can help to identify sequencing errors (e.g. frameshifts) that require the sequence itself to be improved at specific positions.
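A frameshift sequencing error typically splits one gene into two neighbouring ORFs on the same strand but in different reading frames. The hypothetical heuristic below flags such ORF pairs when they overlap or are separated by only a short distance, marking positions worth re-checking in the sequence. It assumes the ORFs come from an ORF scan or gene-finder as (strand, start, end) tuples, and the distance cutoff is an arbitrary illustration. In practice, curators combine such signals with database hits that span both ORFs.

```python
from typing import List, Tuple

Orf = Tuple[str, int, int]  # (strand, start, end): 0-based forward coordinates, end exclusive

def frameshift_candidates(orfs: List[Orf], max_gap: int = 50) -> List[Tuple[Orf, Orf]]:
    """Pairs of neighbouring same-strand ORFs in different frames that nearly touch."""
    candidates = []
    for strand in ("+", "-"):
        same_strand = sorted((o for o in orfs if o[0] == strand), key=lambda o: o[1])
        for left, right in zip(same_strand, same_strand[1:]):
            gap = right[1] - left[2]  # negative if the two ORFs overlap
            # ORF lengths are multiples of 3, so start % 3 identifies the frame on either strand
            different_frame = left[1] % 3 != right[1] % 3
            if different_frame and -max_gap <= gap <= max_gap:
                candidates.append((left, right))
    return candidates

orfs = [("+", 0, 300), ("+", 310, 610), ("+", 1000, 1300), ("-", 2000, 2300)]
print(frameshift_candidates(orfs))
```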

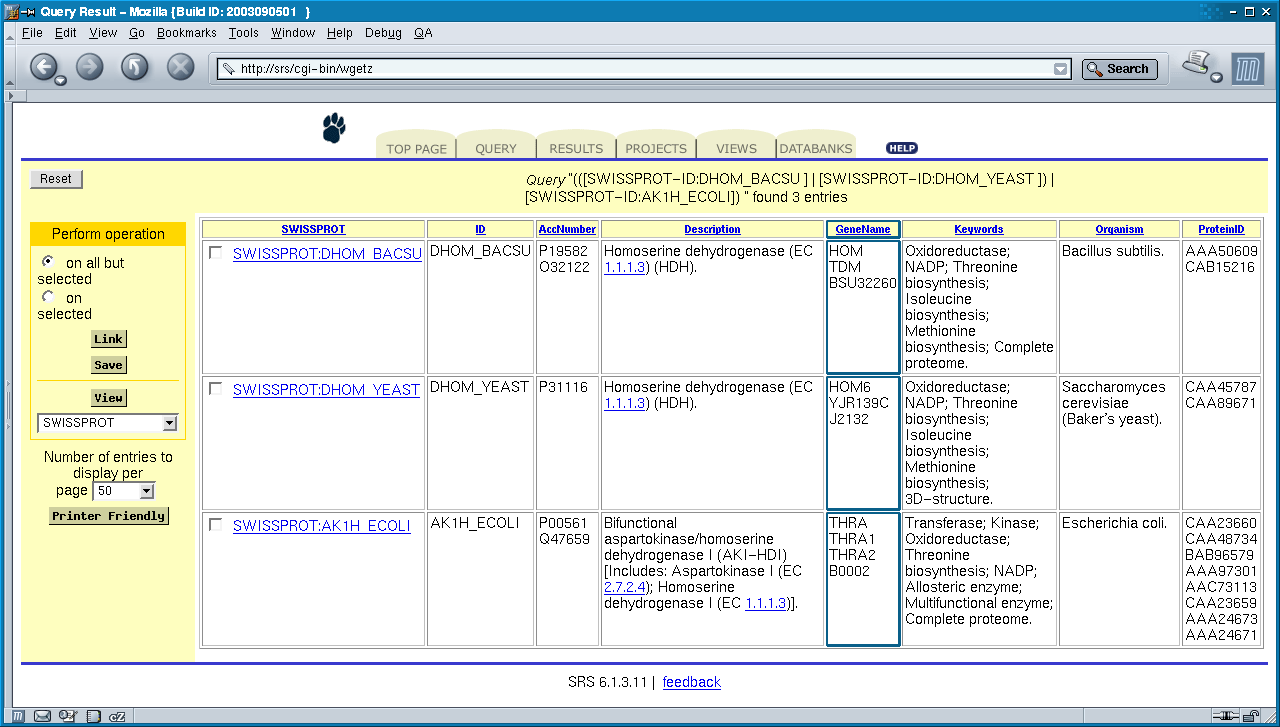

Another important aspect for the success of any genome annotation project is the use of a consistent nomenclature when assigning gene names. Comparing just a few existing genome annotations shows that there is no commonly used systematic naming scheme. For example, the genes coding for the enzyme homoserine dehydrogenase are named completely differently in the corresponding annotations for E. coli (THRA, THRA1, THRA2, or B0002), B. subtilis (HOM or TDM), and S. cerevisiae (HOM6, YJR139C, or J2132), as illustrated in figure 5.

Figure 5: Searching for a homoserine dehydrogenase in the database using the SRS system results in a number of hits for various organisms. The hits shown here illustrate that for only three organisms 9 different gene names were assigned.

These genes can only be identified as encoding the same enzyme because each database entry is additionally mapped to the same enzyme classification number, EC 1.1.1.3. Inconsistent naming not only prevents simple comparisons between different organisms but also complicates the identification of genes with the same or similar function. Using a standardized vocabulary like the Gene Ontology (GO) might therefore be one of the most fruitful efforts towards a unified standard for genome annotations.
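The gene names listed above for homoserine dehydrogenase show how the EC number serves as a common key. The sketch below groups the nine names by EC number, using a small hand-made table rather than a real database query. In a real project, these mappings would come from the database entries themselves or from EC and GO cross-references.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (organism, gene name, EC number) for homoserine dehydrogenase, as listed in the text
ENTRIES: List[Tuple[str, str, str]] = [
    ("E. coli", "THRA", "1.1.1.3"), ("E. coli", "THRA1", "1.1.1.3"),
    ("E. coli", "THRA2", "1.1.1.3"), ("E. coli", "B0002", "1.1.1.3"),
    ("B. subtilis", "HOM", "1.1.1.3"), ("B. subtilis", "TDM", "1.1.1.3"),
    ("S. cerevisiae", "HOM6", "1.1.1.3"), ("S. cerevisiae", "YJR139C", "1.1.1.3"),
    ("S. cerevisiae", "J2132", "1.1.1.3"),
]

def names_by_ec(entries: List[Tuple[str, str, str]]) -> Dict[str, List[str]]:
    """Collect all gene names (with organism) that share an EC number."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for organism, name, ec in entries:
        groups[ec].append(f"{name} ({organism})")
    return dict(groups)

for ec, names in names_by_ec(ENTRIES).items():
    print(f"EC {ec}: {len(names)} names -> {', '.join(names)}")
```

A controlled vocabulary such as GO generalizes this idea from enzymes to functions, processes, and cellular locations in general.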